Palestine motivation Tik Tok mega mix. Part 2. 20231014-20240209

Today is the “International Day of Solidarity with the Palestinian People”, which is perfect timing. I recently wrote a letter to a friend to rebut some of the criminally lazy Zionist talking points that are making the rounds, and I thought I would post a generic version of this letter for my loyal audience.

Dear confused Zionist,

...Before I address each of your claims point by point, I want to say that the allegations against Israel are incredibly serious, and I have to ask: are you so sure of what you're saying that even allegations of genocide and torture do not warrant a couple of earnest hours of steelmanning the critical literature?

--------------------

Claim 1: "the IDF are not rapists and thugs". The IDF is a conscript army comprising all kinds of people, from the well intentioned to the extreme psychopath. That's the reality of armies. Does the IDF have a well-documented history of sexual violence? Yes, both inside the IDF and against Palestinians, and it's a big problem. A major component probably comes from these "Rabbinical Fatwas" ordained by the IDF chief rabbi's interpretation of extreme statements about "goyim" found in the Talmud. It's indefensible. https://www.timesofisrael.com/idf-taps-chief-rabbi-who-once-seemed-to-permit-wartime-rape/ https://www.jpost.com/israel-news/article-691641 https://jordantimes.com/opinion/ramzy-baroud/untold-story-abuse-palestinian-women-hebron https://www.arabnews.com/node/299646

Claim 2: "Hamas brainwashes children". Even granting that this is true, there is a context that facilitates it: the total annihilation of hope imposed on young people in Palestine by a brutal siege. Further, one could easily say the same thing about Israel, which pours enormous resources into propaganda. Hamas has also taken steps to tone down its original charter, so that it is more focused on self-determination for Palestinians and not the destruction of Jews. That is a fact. Its revised position is anti-Jewish only to the extent that Judaism is relentlessly conflated with Zionist expansion for propaganda purposes. It is true that Hamas is explicitly anti-Zionist by any means available, and they do want to dismantle the state of Israel.
After watching the genocide in Gaza for the last 50 days, I think it's fair to say that it is not just Hamas that isn't in love with the state of Israel. It's also worth acknowledging that international law does allow people under military occupation to fight back, and Gaza is effectively occupied because it is enclosed, even if Israel is not the day-to-day authority. Also, the origin story of Hamas is hardly something Israelis can honestly sweep under the rug: it was started by the orphans of Zionist massacres, and nurtured by people like Netanyahu to undermine Palestinian moderates. "Hamas, in their 1988 covenant, purposefully fused these two struggles into one against the Jews and Israel. ...In contrast, the 2017 covenant denies the connection in its only direct mention of Jews everywhere..." https://en.wikipedia.org/wiki/Hamas_Charter https://en.m.wikipedia.org/wiki/Right_to_resist

Claim 3: "since 1948 there has been non-stop terrorism aimed at Israel". Nobody serious supports terrorism: for example, the bombing of the King David Hotel, the assassination of Count Bernadotte by the Stern Gang, or suicide bombings. But are the non-stop expansionist policies that simply allow settlers to steal the land and houses of the indigenous Palestinians a form of terrorism? Palestinians feel terrorized by them, and they are all illegal under international law and have been condemned many dozens of times by the UN. Let's get serious about which direction most of the terrorism is flowing. https://en.m.wikipedia.org/wiki/List_of_United_Nations_resolutions_concerning_Israel

Claim 4: "the Palestinians have rejected all these various peace plans over the years, so this is their own fault". In each case there were excellent reasons to reject these "offers". For example, if you actually look at the offer made at the 2000 Camp David Summit, it was completely grotesque, and the very definition of Apartheid.
Even Shlomo Ben-Ami, Israel's former foreign minister, later admitted it was a shit deal. https://en.m.wikipedia.org/wiki/Israeli%E2%80%93Palestinian_peace_process

Claim 5: "expect to have been raped just for raving". Possibly, but Israel has provided very little evidence of this, has taken almost no measures to preserve or disclose that evidence, and has already had many aspects of its account of Oct 7 discredited. There were no "40 beheaded babies" discovered, for example, nor any babies found in ovens. Yes, rape might happen during a big prison break, which is part of why it would be better not to imprison the people of Gaza. The primary tactics employed by Hamas relate to defensive urban combat, not sexual violence. https://www.theguardian.com/world/2023/nov/10/israel-womens-groups-warn-of-failure-to-keep-evidence-of-sexual-violence-in-hamas-attacks https://m.jpost.com/israel-news/article-772181

Claim 6: "Hamas gets millions of dollars from Iran and have helicopters, millions of guns, and all the basic necessities of life they could want or need". Even if Iran supplies Hamas with millions of dollars, training, and "helicopters", this is an absolute joke compared to western support of Israel, and Israel punishingly controls what goes in and out of Gaza. A Gazan can't even buy a surfboard, let alone threaten Israel with some helicopter. Ridiculous. https://www.npr.org/2023/10/26/1208456496/iran-hamas-axis-of-resistance-hezbollah-israel https://www.haaretz.com/israel-news/2023-11-18/ty-article/.premium/israeli-security-establishment-hamas-likely-didnt-have-prior-knowledge-of-nova-festival

Claim 7: "Israel's prisoners were violent criminals, not innocents like Hamas's hostages". The majority of Israel's returned hostages were held without charge as administrative detainees. Many were children when they were taken.
The "violent crimes" of these people are very difficult to evaluate, since Palestinians in Gaza and the West Bank do not have access to the same level of justice as Israeli citizens. One woman, Israa Jaabis, was returned by Israel terribly burned all over her body, having been held for attempted murder, but all we know is that she was in a car accident that left her badly burned, and which may have been a suicide-by-cop attempt. Okay. That's possibly a violent criminal, given her circumstances? And what about all the children Israel was holding hostage? Children alleged to have been tortured, at the very least by solitary confinement. "A Save the Children report earlier this year found that the majority of Palestinian children detained by the Israeli military who we consulted experienced physical and emotional abuse, including being beaten (86%), threatened with harm (70%), held in solitary confinement (60%) or hit with sticks or guns (60%). Some children reported sexual violence and abuse, and 69% reported being strip searched during interrogation." https://reliefweb.int/report/occupied-palestinian-territory/save-children-condemns-exploitation-children-geopolitical-end-first-group-child-hostages-and-child-detainees-released https://www.forbes.com/sites/willskipworth/2023/11/25/israel-released-39-palestinian-prisoners-heres-what-we-know-about-them/

Claim 8: "there is a massive surge in anti-semitism around the world, which just goes to show how serious Israelis need to take their own protection". There is definitely a massive surge in anti-Zionism. Many of the protests are being led by Jewish groups, and leading Israel critics are Jewish themselves. Anti-semitism is routinely denounced at protests, and the protesters have firmly established this as a norm.
The idea that the protests in the west are pro-Hamas, as opposed to pro-Palestinian self-determination, is complete bullshit, and references aren't needed for that, since I can simply point anyone to my Jewish friends who attend and help organize these protests.

--------------------

Now, I have responded to each of your claims in good faith, and I trust that, out of respect, you will do the same for me in return.

Claim 1: Israelis are denying medical treatment to innocent civilians in Gaza, even though it is very possible to deliver this aid without it going to Hamas. So imagine having this conversation not with me, but with a child who needs a limb amputated and has no anesthetic. The international community is effectively unanimous in saying that Israel is inflicting this pain as a form of collective punishment. https://press.un.org/en/2023/sc15473.doc.htm

Claim 2: Israeli leaders have openly been calling for war crimes to be committed. They want to "level" Gaza. They muse openly about nuking the place. The IDF uses restricted munitions like white phosphorus. They've been caught using human shields. They have been attacking "soft targets" like hospitals and schools without providing any evidence, or with just half-assed, post-hoc joke evidence. Do you believe that international laws on war crimes, established in the aftermath of the Holocaust, are optional suggestions?

Claim 3: Israel is being led by a psychopath who is already on trial for corruption. Netanyahu has a long history of being the worst. Nobody likes him, and he has long been implicated in war crimes, along with earlier brutes like Ariel Sharon and Menachem Begin. Do you actually like the Likud party? Do you agree with Netanyahu's recent attacks on the independence of Israel's Supreme Court?
https://en.wikipedia.org/wiki/Israeli_war_crimes https://www.aljazeera.com/news/2023/11/15/lawyers-for-gaza-victims-file-case-at-international-criminal-court

Claim 4: Israel is trying to make us hate Arabs and to deny us our freedom of speech to criticize Israel. Do you think people should be losing their jobs just because they express horror at the genocide they are seeing with their own two eyes through their phones?

Sincerely,
Me.

For more media: https://wootcrisp.com/favourite-tik-tok-videos

As a complete breakdown of the moral credibility of the “west” looms, due to Israel’s direct assault on civilization that began a month ago, we must all consider the robustness of our beloved internet. Deepfakes, intimidation, censorship, and surveillance hint at a world in which even small countries can have wildly disproportionate effects. That problem doesn’t go away no matter what you think of Israel and Palestine: how do we survive the lack of trust, lack of plans, and lack of coherent leadership?

Better educating ourselves about mesh grids, digital signatures, and forming effective polycules needs to be part of our collective learning diets immediately if we plan to survive very long. With that in mind, EOS was a cryptocurrency (token, project) I had to learn how to use at one point, and it was extremely interesting. It was a pioneer in developing an idea called “delegated proof-of-stake”. In theory, it solves the environmental problem of Bitcoinesque proof-of-work cryptocurrencies by having users stake their “tokens” with “delegates”, who do the work of verifying transactions among their subordinate stakeholders. The delegates can, in principle, reverse transactions if needed, by taking a vote among the top 21 stakeholding delegates (EOS’s elected “block producers”). There was much competition for those top spots. I also remember people trying to smear EOS as “political” a lot, but it brought up some very important issues, such as when the community tried to get “it” to settle on its own constitution. It seemed to get hijacked quickly, away from people who saw it as a utopian wellspring from which the goods of technology could actually be distributed to everyone fairly. Cold, hard developer “bounties”, and nothing else, seemed to be winning when I last read about it. Sad imho. I would like to know more about the inside story of the EOS project. I will look into it when I have more time.
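
To make the delegate election concrete, here is a minimal Python sketch of stake-weighted delegate selection plus a supermajority vote. It is an illustration under stated assumptions, not EOS's actual implementation: the function names are hypothetical, each voter's full stake is assumed to count toward every delegate they vote for, the number of active delegates is just a parameter (EOS used 21 block producers), and the reversal vote is modeled as a simple more-than-two-thirds check.

```python
from collections import defaultdict

# Illustrative only: EOS used 21 active block producers.
NUM_ACTIVE = 3


def elect_delegates(votes, stakes, num_active=NUM_ACTIVE):
    """Pick the top delegates by stake-weighted votes.

    votes: voter -> list of delegate names the voter supports
    stakes: voter -> number of tokens the voter holds
    Assumption: a voter's full stake counts toward every delegate they pick.
    """
    tally = defaultdict(float)
    for voter, delegates in votes.items():
        for delegate in set(delegates):  # ignore duplicate votes
            tally[delegate] += stakes.get(voter, 0)
    # Highest stake first; ties broken alphabetically so results are deterministic.
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:num_active]]


def supermajority_approves(approvals, active):
    """True if more than two thirds of the active delegates approve an action."""
    yes = sum(1 for delegate in active if delegate in approvals)
    return 3 * yes > 2 * len(active)  # integer math avoids float rounding


if __name__ == "__main__":
    votes = {"alice": ["d1", "d2"], "bob": ["d2", "d3"], "carol": ["d4"]}
    stakes = {"alice": 100, "bob": 50, "carol": 120}
    active = elect_delegates(votes, stakes)
    print("active delegates:", active)
    print("reverse transaction?", supermajority_approves({"d2", "d4"}, active))
```

With three active delegates, two approvals fall short of a supermajority and three clear it. The integer comparison is a deliberate choice so that a vote sitting exactly at two thirds is never misjudged by floating-point rounding.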

It’s very difficult to answer this question. Take this 2021 article by Vitalik Buterin as a starting point for your thoughts. Read to you by me:

So you see how complicated this really is.

Partly in the hope of selling my votecoin.com domain name at some point, I occasionally take notes on its GitHub page. I can say confidently that the philosophical implications of the technical details alone, for an idea like voting with cryptocurrency, are enough to intimidate. It’s hard to remember what exactly the plan for it is, really.

ChatGPT related, part three.

I really haven’t been able to keep up with much of the technical mechanics of all the different “large language models” everyone is so rightly interested in these days. Therefore, my opinions here might not be fair to the widespread idea that the interesting recent “AI” model outputs we’re seeing arise from “unexpected” and emergent phenomena once these models are scaled up to tens of billions of tunable parameters. The story, as I have it, is this: harvesting all the text on the public internet, along with various other sources of text, and using this text data to train models of word prediction (i.e. predicting the next 1–3 most likely words, based on which words have historically come next), and then scaling these models up in size, which self-attention mechanisms made affordable, is responsible for significantly improved word prediction accuracy, and for lengthening how far into a sentence or paragraph words can be accurately predicted. The important scientific claim is that these models “somehow” jump from “just” predicting words to intelligently applying knowledge learned from predicting words to shape functional roles for those words, effectively bootstrapping a recurrent process of incremental “semi-supervised” improvement using feedback from the iterated predictions. That’s roughly what I think the dominant narrative is about what is happening with these models.
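
The word-prediction objective described above can be shown in its most stripped-down form with a toy bigram counter. This is a hedged illustration only: it has no attention, no neural network, and no learned parameters, and the function names are hypothetical. It does, however, return the 1–3 most likely next words purely from which words have historically come next in its training text.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For each word, count which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        following[prev][nxt] += 1
    return following


def predict_next(following, word, k=3):
    """Return up to k words most often seen right after `word`."""
    counts = following.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(k)]


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    print(predict_next(model, "the"))  # "cat" ranks first: it follows "the" twice
```

Everything the large models add (long contexts, attention, billions of parameters) sits on top of this same "predict what historically came next" objective, which is why the debate is about what emerges at scale rather than about the objective itself.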

I think that’s probably partly true. But I think there’s a less exciting interpretation, or at least an alternative hypothesis, that might be generously garnered from Noam Chomsky’s comments on this topic. I admit that I’m conjecturing all this from ridiculously truncated soundbites that I vaguely remember from a couple of months ago, an ignorance excusably facilitated by little obstacles like New York Times paywalls. His stance appeared to me to fit a classic pattern of needlessly cantankerous and cryptic positions, but positions that are also very easy to misunderstand, and that have previously become honeypots for intellectuals who underestimate how well Chomsky usually covers his bases. I believe he referred to these “LLM” models as producing a kind of glorified plagiarism, or something like that. Since nobody has time to look into the nuance of statements like these, which don’t seem to directly address the central issue of “emergent” language entities, probably nobody has looked into the matter further; they just moved on. “Chomsky is getting old, and last I came across him he seemed to be late in understanding the Ukraine situation, so he must be just babbling or something due to age, so I’ll just see what’s up on Tik Tok again”, someone might say upon seeing the issue come up derisively on r/MachineLearning or Scott Aaronson’s blog. As I say, though, it is my experience that Chomsky often has a safe, wise conclusion in mind when he says something easily perceived as provocative. Even with his Ukraine opinions, one could say he had a responsibility to be the last on board with anything, so he wasn’t wrong at all to be very skeptical about what was happening there.

In this case of recent LLM performance, I think one could connect his seeming dismissiveness to some of his previous “infuriating” positions on the topic of our “cognitive limits”. Nobody likes that topic, I find, because it doesn’t seem like it could possibly be mentally stimulating to study something that is impossible to study from the outset. But what if GPT, scaled up an order of magnitude, is really just exceptionally good at plagiarism, like he’s saying? What if we, as individuals, just don’t know all the different writings there have been on millions of topics, so we can’t really see the plagiarism unless it’s on a topic we’re intimately familiar with? Our individual cognitive limit is exceeded in this case, so we’re inclined to see magic, but it’s just plagiarism. Thus, the “babbling” about cognitive limits matters.

Probably both of these hypotheses have some truth to them. But who knows, since there’s hardly time to read all the expert thoughts on the matter, especially when important models aren’t even public, and experts are disagreeing, fighting for attention, or confused. I think the super-plagiarism metaphor should serve as a null hypothesis we can make comparisons against later on, while we keep the focus on what it means to coexist in a society with entities that can only be understood if we collectively share our expertise. That’s what I’m getting at here.
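
One crude way to start operationalizing the super-plagiarism null hypothesis is to measure how much of a model's output appears verbatim in its training text. The sketch below assumes we actually have the training corpus (for important models we don't) and assumes verbatim word n-gram overlap is a fair proxy for plagiarism, which it is not for paraphrase. The function names and the default `n=4` are arbitrary choices for illustration.

```python
def ngrams(words, n):
    """Set of all length-n word sequences in a list of words."""
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(output, corpus, n=4):
    """Fraction of the output's word n-grams found verbatim in the corpus."""
    out_grams = ngrams(output.lower().split(), n)
    if not out_grams:
        return 0.0  # output too short to have any n-grams
    corpus_grams = ngrams(corpus.lower().split(), n)
    return len(out_grams & corpus_grams) / len(out_grams)


if __name__ == "__main__":
    corpus = "the quick brown fox jumps over the lazy dog"
    print(verbatim_overlap("the quick brown fox sleeps", corpus))
```

A score near 1 would be consistent with copying; a low score does not rule out a subtler "super-plagiarism" of ideas, which is exactly why it works better as a baseline than as a verdict.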

If my ignorance is showing, do enlighten me. I freely admit that I’m conjecturing about things I could really look at more closely. I just don’t really have time to look, as the pace of things can take on the look of a hopeless shell game.

Currently watching:

Noam Chomsky reference remarks about ChatGPT:

Chomsky reference passage on cognitive limits, with bonus me dancing.

Other videos I’ve been kind of watching:

“ML” playlist – https://youtube.com/playlist?list=PLh9Uewtj3bwkBwNZe75u7b5nvQaO4ETP6

Papers recently read:

None on this topic in months, but some of the ML playlist videos focus visually on relevant PDFs, and I have been surprised by some of what I have seen in them. For example, simply having GPT-4 reflect on what it has just said makes a really noticeable difference. I mean, of course, but still, it was a striking contrast with the claim that GPT-3 didn’t really improve using this reflection technique. The comparison graph is in one of the recent papers about ChatGPT and reflection, and is discussed in one of the recent ML playlist videos linked above. No time to serve it to you on a silver platter tonight. I’ll edit this later if nuance is needed.
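
For readers who haven't seen the reflection technique, here is a generic draft, critique, and revise loop in Python. It is a sketch of the general idea, not the method from the specific paper mentioned above: `ask` is a hypothetical stand-in for whatever function sends a prompt to a model and returns its reply, and the prompt wording is made up.

```python
from typing import Callable


def reflect_and_revise(ask: Callable[[str], str], question: str, rounds: int = 1) -> str:
    """Draft an answer, then have the same model critique and revise it."""
    answer = ask(question)
    for _ in range(rounds):
        critique = ask(
            f"Question: {question}\nAnswer: {answer}\n"
            "Point out any mistakes in this answer."
        )
        answer = ask(
            f"Question: {question}\nAnswer: {answer}\nCritique: {critique}\n"
            "Write an improved answer."
        )
    return answer


if __name__ == "__main__":
    # Hypothetical stand-in for a real model call, so the loop can run offline.
    def fake_ask(prompt: str) -> str:
        if "Write an improved answer." in prompt:
            return "revised answer"
        if "Point out any mistakes" in prompt:
            return "the draft skipped a step"
        return "first draft"

    print(reflect_and_revise(fake_ask, "ABC is to ABD, as XYZ is to?"))
```

Each round costs two extra model calls, which is part of why it matters whether a given model actually benefits from the critique step.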

Noun: kaponion – the sort of shabby opinion one would expect a kapo to have.

See: kapo, opinion.

Example kaponion – “Once you’ve had a job for a week or two, it’s your responsibility to shit all over everyone when they aren’t working [sic]”

Plural noun: sleeple – first order pejorative term, or second order ironic term, for a political demographic seen to be asleep at the wheel.

Example usage: I’ve felt so sleepy recently, I’m at risk of becoming a member of set sleeple.

See: sheeple, daydreaming.

Contrast with: wolfle [plural noun] – demographic of hyper-vigilant individuals reinforced by the predation returns of both sheeple and sleeple.

Noun: Lobotomism – political ideology espousing the need to lobotomize most citizens in the interest of stability. Where stable solutions for planetary systems with billions of sentient individuals are very difficult to find, reducing citizen complexity could mean the difference between having a model for some amount of time and not having one.

Example application: resolving the tension between libraries and capitalism, by censoring the former.

See: lobotomy, capitalism, monarchism, eusociality, individuality.

Contrast with: “optimism” – a political ideology that assumes the returns from superrational actions can dynamically find stable solutions, by providing a sandbox for citizen activities that would otherwise warrant a good old-fashioned round of lobotomies if everyone were to do them.

This is part two in a series of explorations looking into “ChatGPT”. Here I look at how it compares to an important model of analogy-making in cognitive science, and at how to save the transcript of a chat.

In a spasm of inspiration, I wanted to use ChatGPT on my phone while at the gym this morning. After having an inspired chat about analogies with it, I wanted to save the record of our conversation, but I could see no way of doing so from the OpenAI interface, nor of printing to PDF from the Brave mobile browser I was using. My scrolling-screenshot tool also couldn’t work out which part of the screen to scroll while taking the screenshot. It then occurred to me that I could ask ChatGPT to output a LaTeX transcript of the conversation, which I could copy and paste into a note file on my phone. It did this, but it ended up being quite confusing to write about later, and I think it’s worth telling a bit of that story, real quick.

I say it was confusing because the LaTeX output that ChatGPT produced was incomplete, and therefore could not be compiled. It was no big deal really, as I just needed to manually paste in the remainder of the transcript and close the document with the “\end{document}” command. But I wanted to emphasize this missing output, and my confusions began when I found myself having to edit ChatGPT’s output to add the italics “\textit{}” command: I was in effect modifying the transcript. Again, no big deal, but on top of this, if I was going to do this correctly, I needed to italicize the “\end{document}” line of the LaTeX code. The problem with this is that the closing “}” would have to come after the \end{document} command, which would obviously result in a compile error. At this point, words like “Quine” and “warning: escape characters will be needed for this, and don’t do it” started pulsing in my mind, since the difference between the source .tex and the PDF it renders to is a different problem entirely from rendering a quotation of the source .tex. Yep. Obviously. Anyway, it was still too much recursion for my brain to handle, so I just fixed up the LaTeX, compiled it, then highlighted the last bit of text in the output PDF. Good enough.
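
The quoting problem above comes down to escaping: to print LaTeX source inside a LaTeX document, its special characters have to be replaced with commands that typeset them literally. Here is a minimal Python sketch, with hypothetical function names, that escapes a snippet and wraps it in `\textit{…}`. The character table is the standard set of LaTeX text-mode special characters; everything else is an illustrative choice.

```python
# LaTeX special characters mapped to commands that typeset them literally.
LATEX_ESCAPES = {
    "\\": r"\textbackslash{}",
    "{": r"\{",
    "}": r"\}",
    "$": r"\$",
    "&": r"\&",
    "#": r"\#",
    "%": r"\%",
    "_": r"\_",
    "^": r"\textasciicircum{}",
    "~": r"\textasciitilde{}",
}


def escape_latex(text):
    """Escape text so LaTeX prints it rather than executing it.

    Mapping character by character means the braces inside
    \\textbackslash{} are never escaped a second time.
    """
    return "".join(LATEX_ESCAPES.get(ch, ch) for ch in text)


def quote_italic(source):
    r"""Wrap escaped LaTeX source in \textit{...} so it renders as italic text."""
    return r"\textit{" + escape_latex(source) + "}"


if __name__ == "__main__":
    print(quote_italic(r"\end{document}"))
```

The usual shortcut for quoting code, `\verb`, can't be used inside the argument of another command such as `\textit`, which is one reason the manual escaping route comes up at all.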

At the same time, I was testing out VS Code for LaTeX, and there seems to be a frustratingly minimal default set of tools for writing LaTeX in VS Code. In particular, the keybinding that should map “<cmd> i” to “\textit{…}” was not working. Despite several attempts to edit the mysterious keybindings.json configuration file in VS Code, I was left having to write out \textit{…} whenever I wanted italics, which I found quite humiliating, if I’m honest.

To bring it back to the original point, all of this was caused by the simple desire to save the chat log, which should obviously just be a normal feature of the interface. But the bigger purpose of today’s exploration was to ask ChatGPT a couple of minor skill-testing analogy questions: questions that I think have particular importance in characterizing the limits of ChatGPT’s intelligence.

Test 3. The Copycat test.

For ChatGPT tests [1-2], click here.

The “Copycat test”, as I call it here, can illuminate, from the output of a black box, the subsymbolic concepts at work, expressed as compounds of “atomic” terms comparable to the relations used in defining the axioms of arithmetic, such as “successorship”, “first”, and “identity”, plus many other domain-specific subconcepts, such as knowledge of the letters of the English alphabet in the case of Copycat. The problem Copycat is concerned with is applying an appropriate analogical transformation to a string of letters, given a source analogy described by a sequence of letters of the alphabet, e.g., “ABC is to ABD, as DEF is to ???”. The nice thing about using these very elementary concepts as the building blocks of an analogical transformation is that the result has an interpretable “perceptual structure” describing its decision. For this reason, Copycat and its extension Metacat are in the top three cognitive models dearest to my heart… Actually, if I’m saying top three, then I have to include Letter Spirit and Phaeaco with them, as they are each superb exemplars of “fluid analogy” type models, just operating in different problem domains: letter sequence analogies, typeface design, and Bongard problems. I explain Metacat, poorly, in this video, but it’s a good video anyway imo. The discussion of ChatGPT continues below.

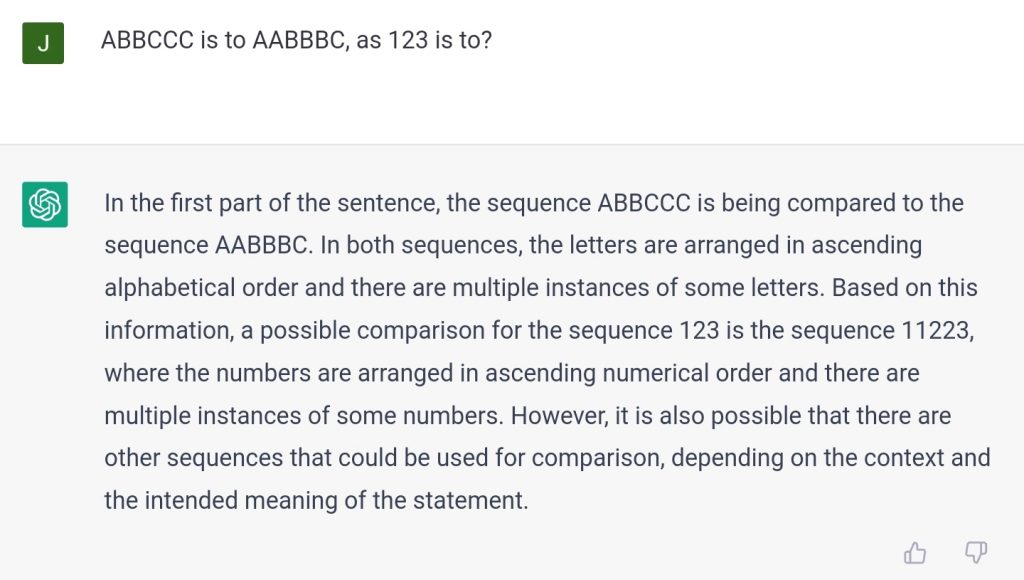

1. Prompt:

ABBCCC is to AABBBC, as 123 is to?

Output: “112223”.

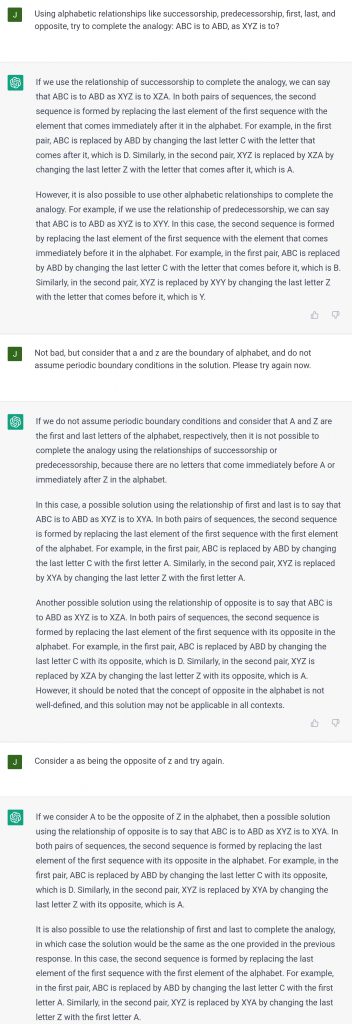

2. Prompt:

…ABC is to ABD, as XYZ is to?

Part 1, chat record.

Verdict: not bad, but not great.

Test 3.1.1 – It was unexpectedly difficult to judge the quality of ChatGPT’s “112223” answer. I had “231” in mind, and I think that is a simpler answer, but ChatGPT’s answer does actually work quite well, in a way. The more I think about it, the more interesting the comparison becomes. You can read “231” as the repetition counts ChatGPT placed in its solution “112223”: two copies of the first number, “1”, then three of the number “2” in the second position, and one of the number “3” in the third position.
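
The two readings can be made explicit with run lengths: ABBCCC has runs of 1, 2, and 3, and AABBBC has runs of 2, 3, and 1. The small Python sketch below (function names hypothetical) computes both answers. It assumes the source run lengths are all distinct, so the permutation between them is unambiguous.

```python
from itertools import groupby


def run_lengths(s):
    """Collapse a string into (symbol, run length) pairs."""
    return [(sym, len(list(run))) for sym, run in groupby(s)]


def count_rotation_answer(src, dst, target):
    """Read target's digits as run lengths, permuted the way src's runs become dst's."""
    src_counts = [n for _, n in run_lengths(src)]
    dst_counts = [n for _, n in run_lengths(dst)]
    # Assumes src's run lengths are distinct, so each lookup is unambiguous.
    perm = [src_counts.index(n) for n in dst_counts]
    return "".join(target[i] for i in perm)


def repetition_answer(dst, target):
    """Read target's digits as symbols, repeated by dst's run lengths."""
    dst_counts = [n for _, n in run_lengths(dst)]
    return "".join(sym * n for sym, n in zip(target, dst_counts))


if __name__ == "__main__":
    print(count_rotation_answer("ABBCCC", "AABBBC", "123"))  # the "231" reading
    print(repetition_answer("AABBBC", "123"))                # ChatGPT's "112223"
```

The first function treats “123” as a list of counts to be rotated; the second treats it as a list of symbols to be repeated. Both are faithful to the source pair, which is why the answers are hard to rank.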

Test 3.1.2 – This was a fail, I thought, as both XYA and YXA are pretty poor answers. ChatGPT seems unable to infer how the use of periodic or non-periodic boundary conditions should change the model’s internal conceptual representation of the English alphabet. Does the alphabet wrap around so that “a” is the next letter after “z”? No, and that can be modulated by an intermediary “opposite” relation connecting the first and last letters of a sequence, like “a” and “z” in this case. Activating that “opposite” relation is critical to coming up with clever, mirrored solutions to the analogy problem, such as WYZ or even DYZ.
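
The boundary-condition point can be illustrated with a hand-coded toy in Python. To be clear, this is not how Copycat works: Copycat discovers rules stochastically using its Slipnet, Workspace, and codelets, whereas here the rule “replace the last letter with its successor” and the mirrored fallback are simply written in. The function names are hypothetical.

```python
import string

ALPHABET = string.ascii_uppercase


def successor(ch, wrap):
    """Next letter; without wraparound, 'Z' has no successor."""
    i = ALPHABET.index(ch)
    if i + 1 < len(ALPHABET):
        return ALPHABET[i + 1]
    return ALPHABET[0] if wrap else None


def predecessor(ch):
    """Previous letter; 'A' has no predecessor."""
    i = ALPHABET.index(ch)
    return ALPHABET[i - 1] if i > 0 else None


def solve(target, wrap=False):
    """Apply the ABC -> ABD rule: replace the last letter with its successor."""
    nxt = successor(target[-1], wrap)
    if nxt is not None:
        return target[:-1] + nxt
    # The literal rule is blocked at 'Z'. Mirror it through the "opposite"
    # relation: last <-> first, successor <-> predecessor.
    prev = predecessor(target[0])
    return None if prev is None else prev + target[1:]


if __name__ == "__main__":
    for wrap in (True, False):
        print(f"XYZ, wrap={wrap}:", solve("XYZ", wrap=wrap))
```

With wraparound, the literal rule yields XYA, one of ChatGPT's answers; without it, the rule hits a wall at Z, and only by swapping both “last” for “first” and “successor” for “predecessor” do you reach WYZ.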

And then this sentence, which ChatGPT came up with at one point to justify its answer, was baffling to me:

the analogy is “book is to reading as fork is to eating,” the answer would be “XYZ is to YXA” because both pairs of words are related to common activities (reading and eating).

Test 4. Export the chat record as LaTeX

Prompt:

Can you format this conversation log in LaTeX?

Output: “Yes, I can format this conversation log in LaTeX. Here is an example of how it might look:”

ChatGPT_prompt_3_2_2_Copycat_compiled_latex

Part 2, chat record: compiled.

Verdict: decent.

Pretty good, but the section headers are a bit redundant, and it abruptly cut itself off at the green-highlighted bit in the PDF.
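
Truncated output like this can be caught before you try to compile. Here is a rough Python heuristic, assuming the transcript is a single .tex string: it checks for `\begin{document}` and `\end{document}` and counts unescaped braces outside comments. It is not a LaTeX parser (verbatim environments, for example, will confuse it), and the function name is hypothetical.

```python
def latex_truncation_problems(source):
    """Return signs that a LaTeX document was cut off (empty list if none found)."""
    problems = []
    if r"\begin{document}" not in source:
        problems.append(r"missing \begin{document}")
    if r"\end{document}" not in source:
        problems.append(r"missing \end{document}")
    depth = 0
    i = 0
    while i < len(source):
        ch = source[i]
        if ch == "\\":
            i += 2  # skip the backslash and the next character, so \{ and \} aren't counted
            continue
        if ch == "%":
            # Skip a comment through the end of the line.
            newline = source.find("\n", i)
            i = len(source) if newline == -1 else newline + 1
            continue
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth < 0:
                problems.append("unmatched }")
                depth = 0
        i += 1
    if depth > 0:
        problems.append(f"{depth} unclosed {{")
    return problems


if __name__ == "__main__":
    cut_off = "\\documentclass{article}\n\\begin{document}\nHello \\textit{wor"
    print(latex_truncation_problems(cut_off))
```

An empty list does not guarantee the file compiles, but a missing `\end{document}` or an unclosed brace is a quick, reliable sign that the model stopped mid-output.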