Wootcrisp

Thomas Cochrane

Samurai Jack

Peep again

#6

Wim Hof

Pro squash player

Napoleon

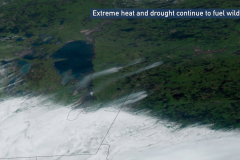

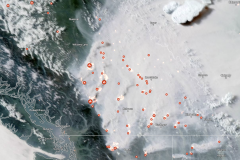

I think that actually seeing the growing number of northern forest fires from a spaceship’s perspective helps build the global perspective needed to address climate change. Looking down on these eruptions of flame could remind us of the consequences of thoughtless behaviours, in particular the dangerous overheating you might expect from drunkenly red-lining any spaceship making its way through our universe.

Products for thermoregulation, health and safety, and productivity, in the age of heat waves and forest fires.

The summer forest fire season here in British Columbia has been driving me crazy for many years now, and to alleviate some of the nuisance I have tried many types of consumer products that seemed like they could help. For each product I say whether or not I have tried it, and I give a subjective rating out of ten.

1. Personal electric air purifier.

A couple of months ago I bought a personal electric air purifier, and I think it might actually be useful this summer. 6/10.

2. Indoor air purification.

i) Low-cost solution: box fan with dual MERV 13 filters. Untested.

Based on tutorials like this: https://marshallhansendesign.com/2012/01/02/studio-operations/

I only just ordered this fan, so I can’t give a personal testimony yet, but these are the parts I’m going to be working with:

FilterBuy 20x20x1 MERV 13 Pleated AC Furnace Air Filter, (Pack of 4 Filters), 20x20x1 – Platinum

ii) Moderate-cost solution: standard portable air purifier. 6/10.

Bionaire BAP600-CN 99-Percent Permanent HEPA Air Purifier with Night Light

iii) Expensive solution: industrial air purifier. Untested.

EnviroKlenz Home/Office Air Purifier.

3. Fireproof document organizer.

4. Fire extinguisher.

I cannot personally testify to the performance of this particular fire extinguisher, but it’s similar to mine and is listed at a pretty good price, given that it has free shipping. It’s your classic “A:B:C class” extinguisher: “For use on Class A (ordinary combustibles), Class B (Flammable liquid) spills or Fires involving live electrical equipment (Class C)”. Untested.

Amerex B402 ABC Multi-Purpose Fire Extinguisher, 5 lb.

5. Personal misting and cooling.

I have tested a number of personal accessories meant to keep you clean and cool, such as gel masks, cooling headbands, shemaghs, gel hats, cooling patches, neck gaiters, cooling mats, and spray bottles.

i) Ice Eye Mask by FOMI Care. 3/10

ii) Ergodyne Chill-Its 6700CT Cooling Bandana, Lined with Evaporative PVA Material. 7/10

iii) Headsweats Protech Hat. 5/10

iv) 20 Pcs Cooling Gel Fever Patches, Cooling Forehead Strips. 1/10

v) Ergodyne Chill-Its 6487 Cooling Neck Gaiter. 4/10

vi) Ergodyne Chill-Its 6602 Evaporative Cooling Towel. 3/10

vii) COREGEAR USA Misters Personal Water Mister Pump Spray Bottle. 10/10

6. Indoor cooling.

I don’t recommend portable air conditioners like the one I have for the reasons detailed here:

i) Low-cost solution: evaporative “swamp cooler”.

Consumers typically find these underwhelming for a couple of reasons: they raise humidity, and they need to be refilled often enough to be annoying. I have never used one, but I thought I would include the highest-rated one I could find on Amazon in case it interests some readers.

ii) Expensive solution: window air conditioner.

MIDEA MAW05M1BWT Window Air Conditioner, 5000 BTU. Untested.

7. Bed cooling.

i) Moderate-cost solution: directional attachments for your AC unit. 9/10.

Rather than buy an expensive cooling mattress, I have found it fruitful to jam a rectangular duct connector onto my AC unit and run a hose into my bed or to my laptop dock.

Imperial Manufacturing Duct End Boot 3-1/4 X 10 X 4In GV0650

POWERTEC 70150 Rectangular Dust Hood for 4-Inch Hose

Hon&Guan 4 inch Air Duct – 32 FT Long

ii) Expensive solution: specialized bed cooling unit. Untested.

8. Other things I would like to try.

i) Cooling shirts.

Sony has launched the Reon Pocket, but for some reason it isn’t available in North America yet.

If you want to buy a cooling shirt here in North America, you’ll have to settle for something like this:

Ergodyne Chill-Its 6665 Evaporative Cooling Vest

ii) Outdoor misting systems.

iii) Door seals.

A small success story from my week has just wrapped up. There is a large, unpopular academic publishing company named “Elsevier” that, until now, I have had to accept as an intimate part of life. Several years ago they acquired the PDF-organizing app I was using, called “Mendeley”. Mendeley was fantastic, really. It did everything you wanted it to do: rename PDF files to a standard format like “<author>_<date>.pdf”, store them where you wanted them stored, automatically fill out metadata by extracting the article DOI, use browser-like tabs for the PDFs currently open, set the proportion of the screen given to an article versus its metadata, export PDFs with standard PDF annotations, easily switch citation styles for bibliography items, cost nothing, shrug it off when you went over your 2 GB of account storage, and offer a basic phone app that synced and read the stored PDFs. So when I read that Elsevier had bought Mendeley, I had a good idea of what I wanted when I looked into alternatives, in particular the “open” alternatives Zotero and Calibre. What I quickly saw was that they were indeed promising efforts, but they were certainly not the polished F-35 that Mendeley was.

First off, both of these programs made you open PDFs in the system’s default PDF viewer, which is a bad sign. It means there is a disconnect between the program and how the program is actually used. For example, you’re not going to be searching your PDF comments from the organizer’s search bar, never mind all the other ways a separate program might annotate a PDF. Those annotations effectively give the PDF a second life, since there’s no guarantee your PDF organizer will understand or act on them. Still, with some plugins and a lot of reading of the Calibre and Zotero manuals, I could see that one day I would be back to use them for real.

I shrewdly put Calibre on “book PDF duty”, since books were too large to keep in the Mendeley library. The basic functionality for organizing the library was there, and the EPUB/MOBI/PDF conversion plugin always did right by me whenever I needed to immediately kill an EPUB or MOBI file by converting it to PDF. However, it was absolutely out of contention as my daily driver for journal articles, simply because DOI-lookup-based file renaming didn’t work out of the box, which made new additions to the library look like a list of “Unknown” and “<!DOWNLOADED FROM EBOK>” titles. Zotero was similarly advertised as an open project, but whatever it may have done better than Calibre, the account you make for syncing was limited to something like 300 MB. Since working around that limit right out of the box was a hassle, I put Zotero aside. I did decide to keep it, though: it may have felt like riding a wildebeest at the time, but it also felt closer to one day learning Mendeley’s panther style.
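For the curious, the DOI-based renaming I wanted is simple to sketch in shell. This is not how Mendeley, Calibre, or Zotero actually implement it: `extract_doi` and `make_filename` are hypothetical helper names, the DOI pattern is the commonly cited Crossref regular expression for modern DOIs, and the author surname and year are assumed to come from a metadata lookup that is not shown here.

```shell
#!/bin/sh
# Hypothetical sketch: find a DOI in some text and build an
# "<author>_<date>.pdf" style filename. Not any real app's implementation.

# Print the first DOI-like string found on stdin, or nothing if none is found.
# The pattern is the commonly cited Crossref regex for modern DOIs; it can
# over-capture trailing punctuation such as a sentence-ending period.
extract_doi() {
  grep -oiE '10\.[0-9]{4,9}/[-._;()/:a-z0-9]+' | head -n 1
}

# Build a filename like "Smith_2019.pdf" from an author surname and a year.
# Both values are assumed to come from a metadata lookup not shown here.
# Spaces in the surname become hyphens so the name stays shell-friendly.
make_filename() {
  author=$(printf '%s' "$1" | sed 's/ /-/g')
  year=$2
  printf '%s_%s.pdf\n' "$author" "$year"
}
```

For example, piping `See doi:10.1234/abcd.5678 (2019)` into `extract_doi` prints `10.1234/abcd.5678`, and `make_filename 'van Dyke' 2019` prints `van-Dyke_2019.pdf`. The hard part in practice is the metadata lookup from the DOI, which is exactly what I wanted the app to handle for me.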

Then, a few months ago, my Mendeley Android app notified me that it would soon be discontinued, and then it was discontinued and would no longer open. Just like that. I had spent years with this app.

Fast forward to this week: I have now spent months living with the consequences of this amputation. I tried putting the Calibre database in a Nextcloud folder to sync with my phone, but the weirdness of syncing the database folder while another synced device might be doing something else to the same file was ultimately an insurmountable hurdle in the few hours I had to figure it out. And was the Calibre Android app really even a good replacement for the Mendeley Android app? A recent sense of urgency pushed me to look for self-hosting packages for Zotero that might work around the 300 MB limit. There were in fact several options, like a Docker server or one that runs an npm server on port 8081, but as you can imagine, I was stoked about neither of them. And then I found the just-released “Zotero beta”, which lets you sync files privately, without storage limits, over WebDAV, which my Nextcloud server supports. So I imported my Mendeley library, synced it to my phone, and in mere minutes Elsevier had finished expelling me as a user.

Holliday:

ytcracker:

Ashour:

Square:

#6:

me:

The faster I run, the faster my to-do list grows, and the faster I see I must run. Every day I swat angrily at the varyingly important tasks that past me has given to present me.

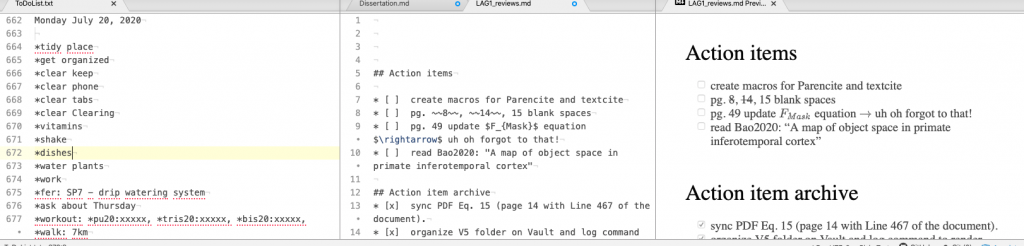

The task list is in chronological order, chunked by day, with a “*” prefix marking completed tasks. With proper foresight, I tell myself, I would at least have used Markdown when I started it, prefixing each line with “* [ ]” or “* [x]” instead of a bare “*”. There are legitimate pros and cons to using Markdown, however. In this case, its most practical benefits are that you can denote headings with #, ##, or ###, and a strike-through with ~~[item]~~, but you can also make basic tables and embed images much as you would with HTML. That latter stuff, though, is a little too fine-dining for a personal to-do list in my opinion, as time is really of the essence.
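Converting the existing list after the fact is not much work either. Here is a minimal sketch of a hypothetical `todo_to_md` shell function, assuming that completed tasks start with a “*”, every other non-blank line is an unfinished task, and blank lines separate the days. If your day chunks have date headings, those would also need to be skipped, which this sketch does not do. Lines already in “* [ ]” or “* [x]” form are left alone, so running it twice is harmless.

```shell
#!/bin/sh
# Hypothetical sketch: convert a "*"-prefixed to-do list into Markdown task items.
# Assumes "*" marks a completed task, any other non-blank line is unfinished,
# and blank lines (kept as-is) separate the days.
todo_to_md() {
  awk '
    /^[ \t]*$/        { print; next }    # blank line between days: keep it
    /^\* \[[ x]\] /   { print; next }    # already a Markdown task item: leave it
    /^\*/ {                              # completed task: "*" prefix
      sub(/^\*[ \t]*/, "")               # strip the "*" and any following spaces
      print "* [x] " $0
      next
    }
    { print "* [ ] " $0 }                # everything else: unfinished task
  '
}
```

For example, a line reading `*buy filters` becomes `* [x] buy filters`, and `call landlord` becomes `* [ ] call landlord`. To convert a whole file, something like `todo_to_md < todo.txt > todo.md` would do, where both filenames are placeholders.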

If you need a professional-looking to-do list for work or something, then the trade-offs are slightly different. Pure Markdown has some issues. First, you’ll notice that you need to put two spaces at the end of a line to get a line break. That’s not very “time is of the essence” in my opinion. Second, rendering Markdown requires a text editor that can do it. I will not seriously suggest running pandoc terminal commands for a simple to-do list, and for an exaggerated form of that same reason I do not recommend reStructuredText for a to-do list. So for text editing I recommend Atom, as it has quite a buffet of packages that are easy to install and let you do things like mix Markdown with LaTeX. While this can tempt you into breaking convention, and therefore portability, because of the idiosyncratic set of packages needed to properly interpret your document, it is easy to imagine recovering that portability with a series of package installation calls at the start of your document, like so:

```shell
apm install language-latex
apm install language-markdown
…
```

I should also note that QOwnNotes has treated me well for many years, as it is specifically designed to sync with ownCloud/Nextcloud, and I’m hesitant to uproot that system for something like “atom-ownsync”, which doesn’t seem to get updated very often.